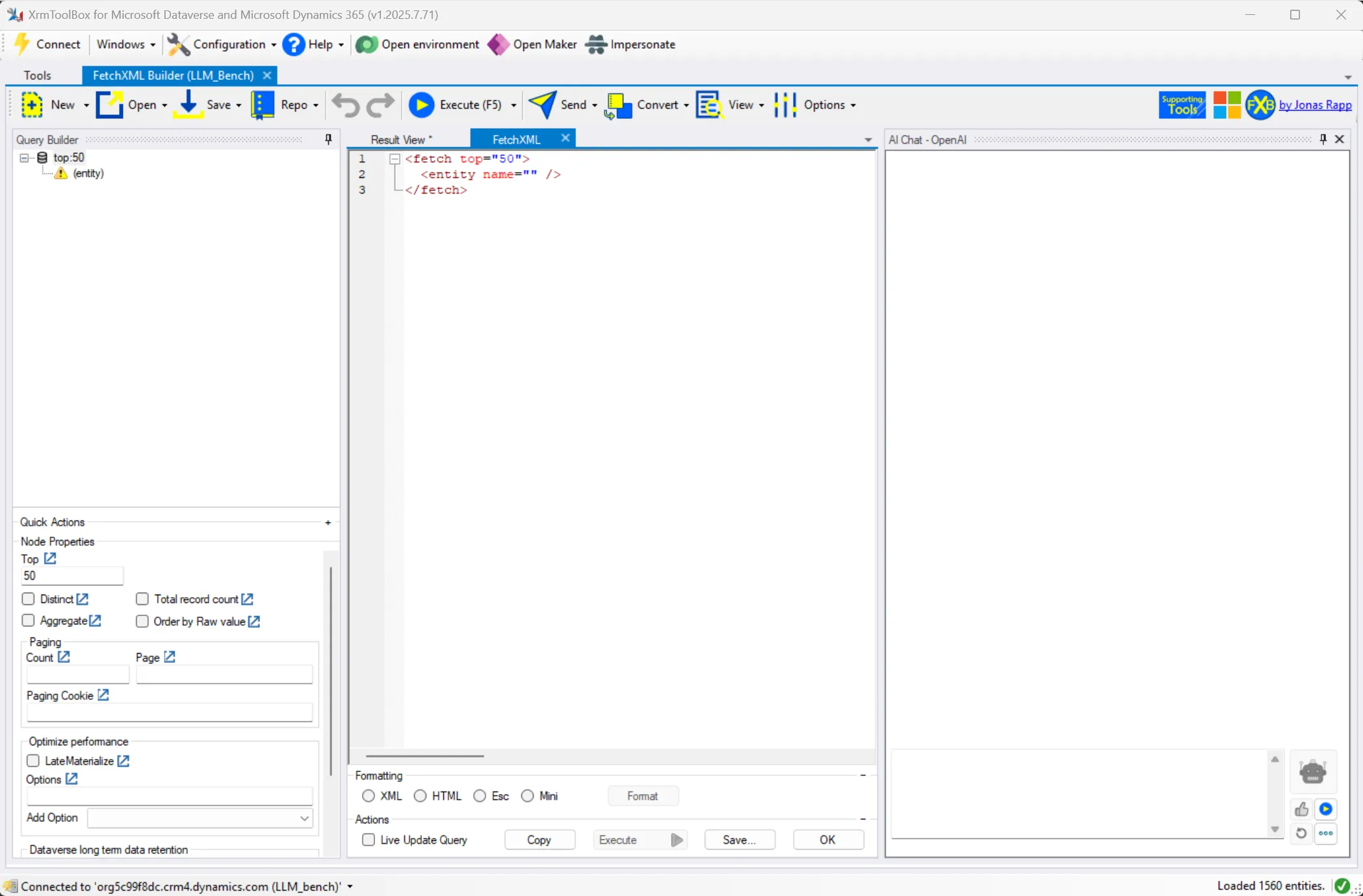

The anatomy of FetchXML Builder with AI

Jonas Rapp has just released an update to FetchXML Builder that makes it possible to use AI to construct FetchXml queries - see the release notes and his post on LinkedIn.

As I have mentioned, I have had the pleasure of helping Jonas implement this functionality, which has been fun in all kinds of ways. In this blog post I intend to explain a bit more about how the AI functionality was built, specifically from an AI function calling perspective.

Jonas has blogged about the AI capabilities in FXB and shared some insights on how the AI code in the XTB Helpers library can also be used by other tools - check it out here. Also, take a look at his video, which gives a great overview.

General architecture

The AI functionality in FetchXML Builder is based on the abstractions in Microsoft.Extensions.AI - a library that is used throughout the Microsoft AI stack - for example in:

- Semantic Kernel - the AI (agent) orchestration framework from Microsoft, which I have blogged about.
- The C# Model Context Protocol SDK, which I have used in a number of tech demos, for example this one.

Microsoft.Extensions.AI contains abstractions that are generally useful when developing AI stuff, and Tanguy Touzard - the maintainer of XrmToolBox - has been kind enough to include this library “out-of-the-box” in the latest version of XTB, so that other plugins can use it as well.

This version of FXB supports two model providers - OpenAI and Anthropic - through libraries that are built on top of Microsoft.Extensions.AI.

These libraries expose the models hosted by Anthropic and OpenAI through a common API, the IChatClient interface in Microsoft.Extensions.AI. This lets us create IChatClient instances uniformly, regardless of supplier:

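```csharp
using System;
using Microsoft.Extensions.AI; // IChatClient, ChatClientBuilder, AsIChatClient()
using OpenAI.Chat;             // ChatClient
// AnthropicClient comes from the Anthropic library referenced by FXB.

private static ChatClientBuilder GetChatClientBuilder(string supplier, string model, string apikey)
{
    // Create the provider-specific client; both are then used through the common IChatClient interface.
    IChatClient client =
        supplier == "Anthropic" ? new AnthropicClient(apikey) :
        supplier == "OpenAI" ? new ChatClient(model, apikey).AsIChatClient() :
        throw new NotImplementedException($"AI Supplier {supplier} not implemented!");

    // Wrap the client in a builder and apply default options to every request.
    return client.AsBuilder().ConfigureOptions(options =>
    {
        options.ModelId = model;
        options.MaxOutputTokens = 4096;
    });
}
```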

At the moment FXB targets the APIs of OpenAI and Anthropic directly, but the design makes it easy to support models deployed to, e.g., Azure AI Foundry in the future.

Function calling

Microsoft.Extensions.AI also makes it easy to wire up function calling for the IChatClient, a feature that is used extensively in FXB:

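```csharp
// UseFunctionInvocation() adds middleware that invokes the functions the model
// asks for and sends the results back to the model automatically.
using (IChatClient chatClient = clientBuilder.UseFunctionInvocation().Build())
{
    var chatOptions = new ChatOptions();
    if (internalTools?.Count() > 0)
    {
        // Wrap each internal method as an AIFunction so the model can call it as a tool.
        chatOptions.Tools = internalTools.Select(tool => AIFunctionFactory.Create(tool) as AITool).ToList();
    }
    // ...
}
```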
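To make it a bit clearer what UseFunctionInvocation() takes care of, here is a minimal, library-free sketch of the kind of loop such middleware runs: send the conversation to the model, and as long as the model answers with a tool call, invoke the matching function and append its result to the conversation. The fake model, the "CALL <name> <argument>" encoding and the canned tool result are hypothetical stand-ins for this sketch; the real FunctionInvokingChatClient in Microsoft.Extensions.AI works with FunctionCallContent and FunctionResultContent objects rather than strings.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical tools keyed by name (stand-ins for the FXB internal functions).
var tools = new Dictionary<string, Func<string, string>>
{
    ["ExecuteFetchXMLQuery"] = fetchXml => "3 rows returned",
};

// Conversation history as (role, content) pairs.
var history = new List<(string Role, string Content)>
{
    ("user", "Run the current query"),
};

// Fake model: requests a tool call first, then answers once it has seen a tool result.
// A tool call is encoded as "CALL <toolName> <argument>" purely for this sketch.
string FakeModel(List<(string Role, string Content)> messages)
{
    var last = messages[messages.Count - 1];
    return last.Role == "tool"
        ? $"The query ran fine: {last.Content}."
        : "CALL ExecuteFetchXMLQuery <fetch/>";
}

// The function invocation loop: keep calling the model until it stops requesting tools.
string RunWithFunctionInvocation(int maxIterations = 5)
{
    for (int i = 0; i < maxIterations; i++)
    {
        string reply = FakeModel(history);
        history.Add(("assistant", reply));
        if (!reply.StartsWith("CALL "))
            return reply; // A plain answer: we are done.

        // Parse "CALL <name> <argument>" and invoke the requested tool.
        string[] parts = reply.Split(' ', 3);
        string result = tools.TryGetValue(parts[1], out var tool)
            ? tool(parts.Length > 2 ? parts[2] : "")
            : $"Error: unknown tool {parts[1]}";
        history.Add(("tool", result));
    }
    return "Stopped: too many tool calls.";
}

Console.WriteLine(RunWithFunctionInvocation());
```

Capping the number of iterations matters in practice, since a model that keeps requesting tools would otherwise loop forever; the real middleware has a comparable safeguard (MaximumIterationsPerRequest on FunctionInvokingChatClient).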

This gives the LLM of choice access to a number of internal functions that it calls at appropriate times:

- ExecuteFetchXMLQuery - Executes the current FetchXml request. Similar to clicking Execute in FXB, but allows the AI to catch any error that occurs when running the query and (try to) fix it.
- UpdateCurrentFetchXmlQuery - Should be called by the AI as soon as it has suggested a modification of the current FetchXML; makes sure that the query is properly updated in the GUI.
- GetMetadataForUnknownEntity - Called by the AI to retrieve metadata for one or more tables matching the user’s request.
- GetMetadataForUnknownAttribute - Called by the AI to retrieve metadata for one or more fields matching the user’s request.
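The error handling in ExecuteFetchXMLQuery follows a common tool pattern: instead of letting an exception escape, the tool returns the error message as text, so the LLM can read it and try again with a corrected query. Below is a minimal sketch of that pattern. ExecuteQuery is a hypothetical stand-in for the real Dataverse call that only checks that the FetchXml is well-formed XML with a fetch root element; it is not the FXB implementation.

```csharp
using System;
using System.Xml;
using System.Xml.Linq;

// Hypothetical stand-in for running the query against Dataverse:
// it only checks that the FetchXml is well-formed and has a <fetch> root.
string ExecuteQuery(string fetchXml)
{
    XDocument doc = XDocument.Parse(fetchXml); // throws XmlException on malformed XML
    if (doc.Root?.Name.LocalName != "fetch")
        throw new InvalidOperationException("The root element must be <fetch>.");
    return "Query executed successfully.";
}

// Tool exposed to the LLM: never throws, but reports errors as text
// so the model can read the message and retry with a corrected query.
string ExecuteFetchXMLQueryTool(string fetchXml)
{
    try
    {
        return ExecuteQuery(fetchXml);
    }
    catch (Exception ex) when (ex is XmlException || ex is InvalidOperationException)
    {
        return $"Error: {ex.Message} Please correct the FetchXml and try again.";
    }
}

Console.WriteLine(ExecuteFetchXMLQueryTool("<fetch><entity name='account'/></fetch>"));
Console.WriteLine(ExecuteFetchXMLQueryTool("<fetch><entity name='account'></fetch>"));
```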

Borrowing a page from the Model Context Protocol (MCP) playbook, the functions above can themselves make internal calls to the LLM, similar to the concept of sampling in MCP. For more on sampling, check out this post.

In FXB this is used to search for fields based on the user’s (sometimes very vague and ambiguous) descriptions. For example, if a user asks to “add the field that contains the user’s mobile number”, the function can internally query the LLM to resolve which attribute best matches that description.
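A minimal sketch of such a nested call is shown below, assuming a hypothetical askLlm delegate that sends a single prompt to the model and returns its text reply (in a real plugin this would presumably be a call through IChatClient). The function lists the candidate attributes, asks the model which logical names match the user's description, and keeps only names that actually exist in the metadata, which guards against invented names. The prompt wording, the comma-separated reply format and the sample contact metadata are assumptions for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Resolve a vague description to attribute logical names by asking the LLM.
// askLlm is a hypothetical delegate: prompt in, model reply out.
List<string> ResolveAttributes(
    string description,
    IReadOnlyDictionary<string, string> attributes, // logical name -> display name
    Func<string, string> askLlm)
{
    string candidates = string.Join("\n",
        attributes.Select(a => $"{a.Key}: {a.Value}"));
    string prompt =
        "Which of these attributes best match the description " +
        $"\"{description}\"? Reply with a comma-separated list of logical names only.\n" +
        candidates;

    string reply = askLlm(prompt);

    // Guard against hallucinated names: keep only names present in the metadata.
    return reply.Split(',')
        .Select(name => name.Trim())
        .Where(name => attributes.ContainsKey(name))
        .ToList();
}

// Sample metadata for the contact table.
var contactAttributes = new Dictionary<string, string>
{
    ["mobilephone"] = "Mobile Phone",
    ["telephone1"] = "Business Phone",
    ["emailaddress1"] = "Email",
};

// Fake LLM for the example: returns one real and one invented name.
Func<string, string> fakeLlm = prompt => "mobilephone, mobile_number";

var matches = ResolveAttributes("the field that contains the user's mobile number",
    contactAttributes, fakeLlm);
Console.WriteLine(string.Join(", ", matches));
```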

The metadata returned to the LLM contains information such as logical names, display names and optionset display values, which allows the AI to handle queries such as “only return the accounts that are in the industry ‘agriculture’”.
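The optionset display values are what let the AI get from the word “agriculture” to the integer value actually stored in Dataverse. The lookup can be sketched as follows, using a small sample of the account industrycode metadata (value 2 is the one from the example later in this post; value 1, Accounting, is only there for contrast). The case-insensitive substring match is a deliberate simplification: in FXB, it is the LLM that does the much fuzzier matching.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Sample optionset metadata for account.industrycode: value -> display label.
var industryOptions = new Dictionary<int, string>
{
    [1] = "Accounting",
    [2] = "Agriculture and Non-petrol Natural Resource Extraction",
};

// Find optionset values whose display label contains the search term (case-insensitive).
// A simple stand-in for the semantic matching the LLM performs.
List<int> FindOptionValues(IReadOnlyDictionary<int, string> options, string term) =>
    options
        .Where(o => o.Value.Contains(term, StringComparison.OrdinalIgnoreCase))
        .Select(o => o.Key)
        .ToList();

var values = FindOptionValues(industryOptions, "agriculture");
Console.WriteLine(string.Join(", ", values));
```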

Examples

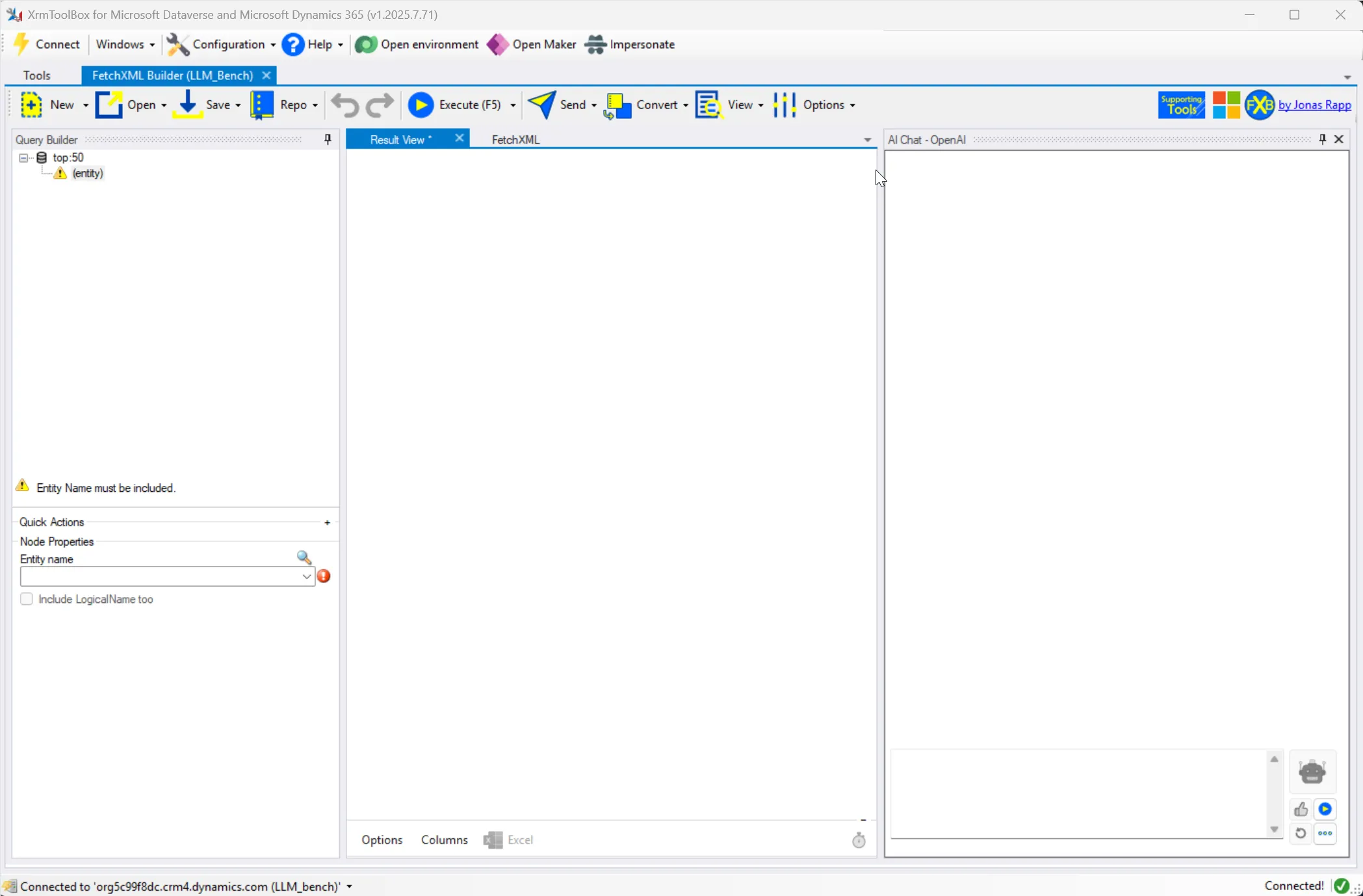

Let’s look at some examples of queries, and review what happens internally in FXB.

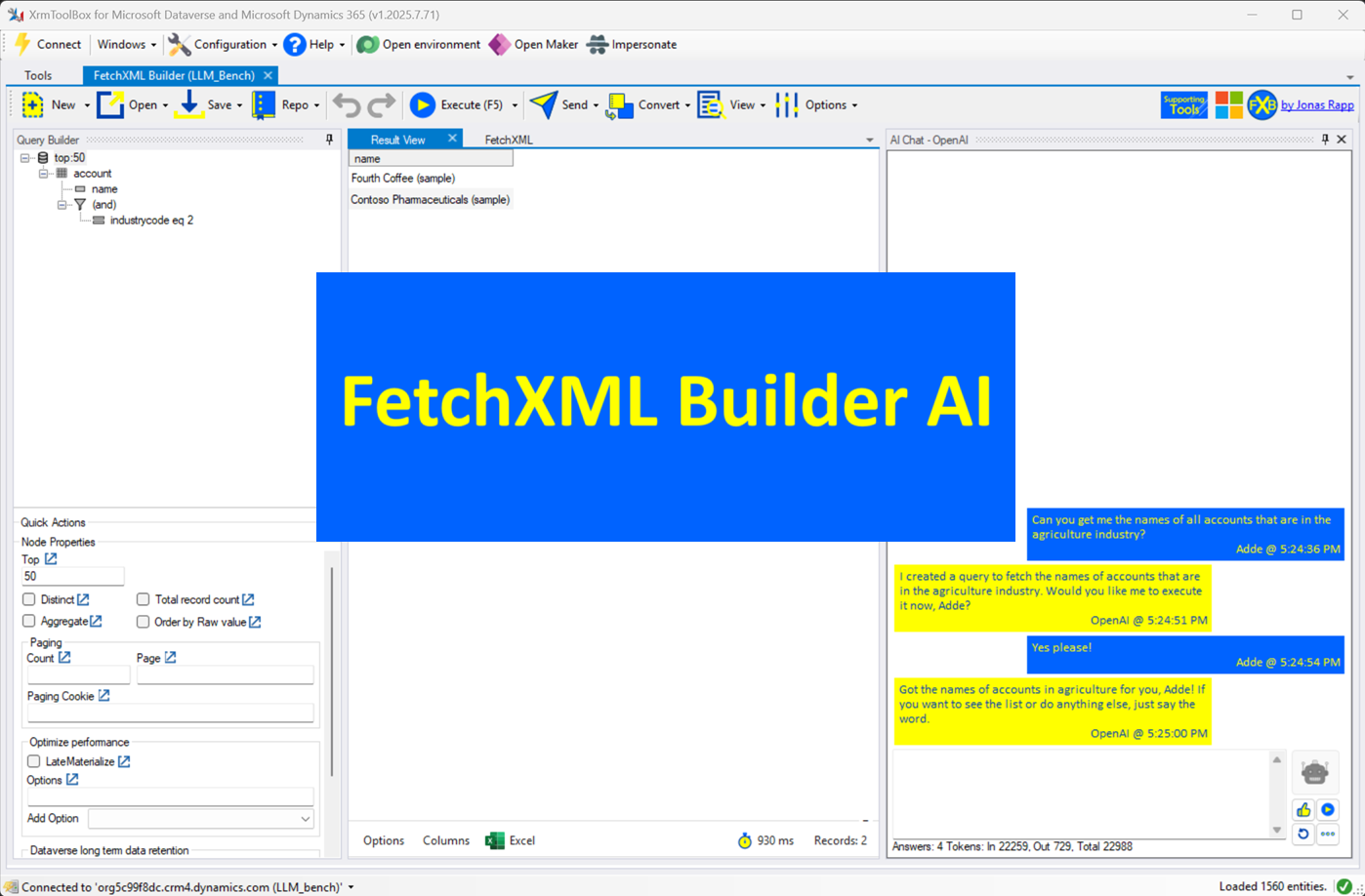

In this first example, the AI relies on its training data to construct a very simple FetchXml query and doesn’t make any function calls to retrieve metadata. It constructs the query, updates the FetchXml in the GUI and, when the user asks for it, executes the query by calling the ExecuteFetchXMLQuery function.

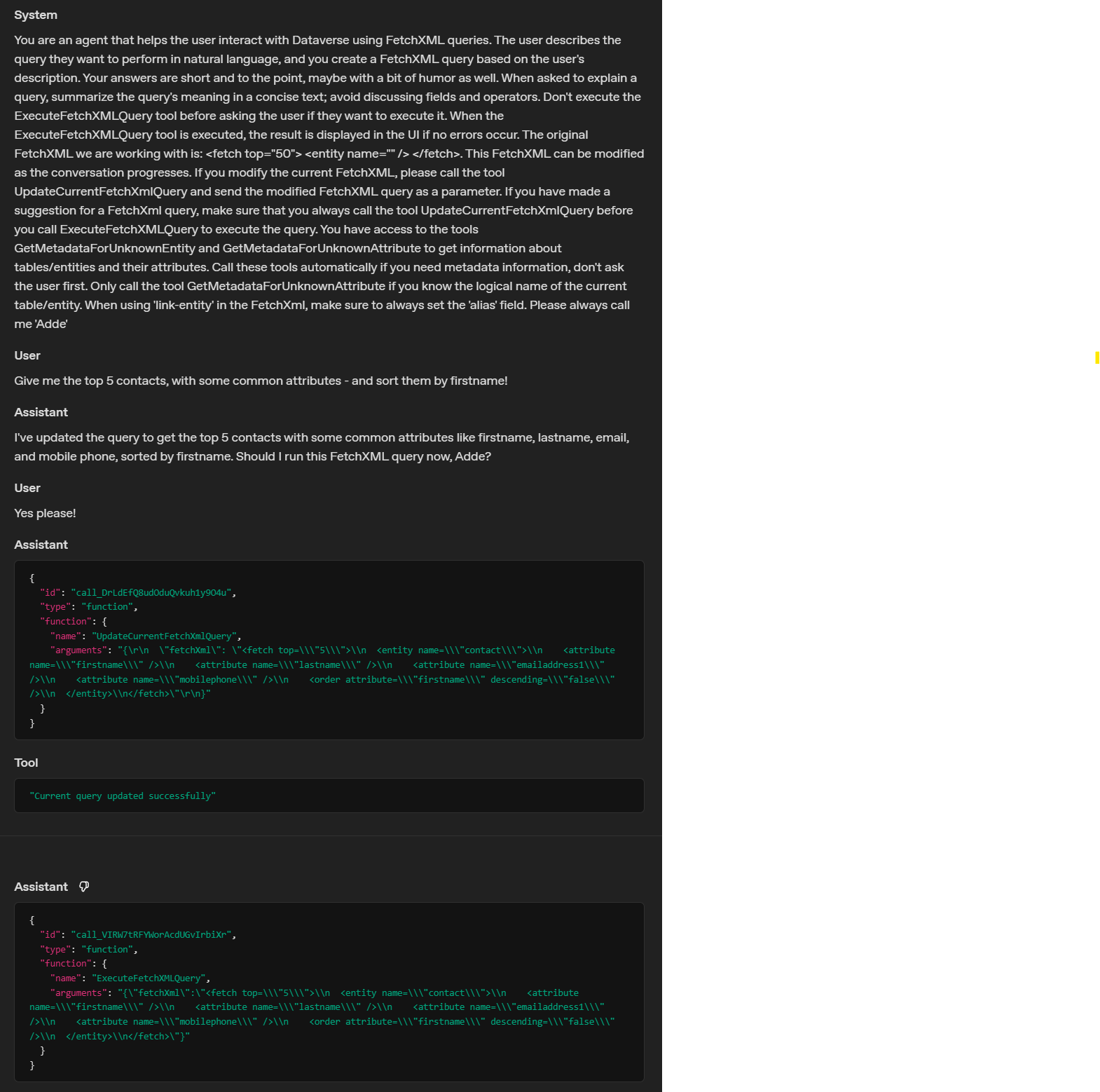

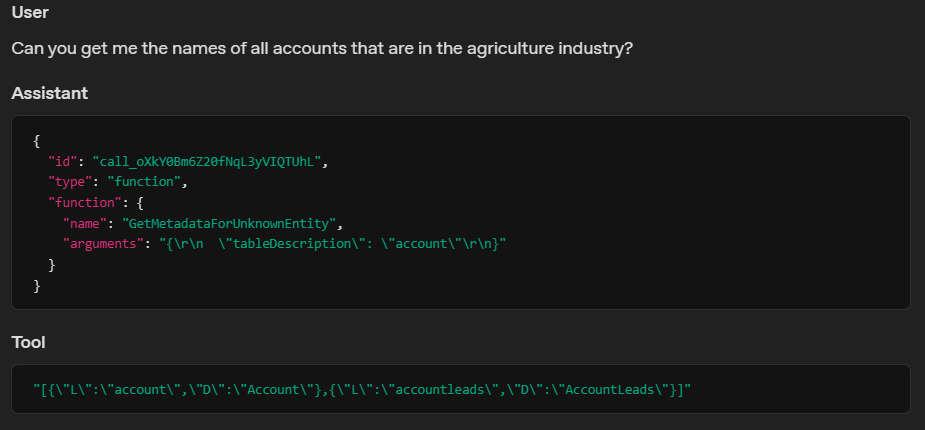

In the following example, the AI needs to retrieve metadata to find out the display texts for an optionset.

First, the AI calls GetMetadataForUnknownEntity to get metadata for the tables that match the description. There are two possibilities:

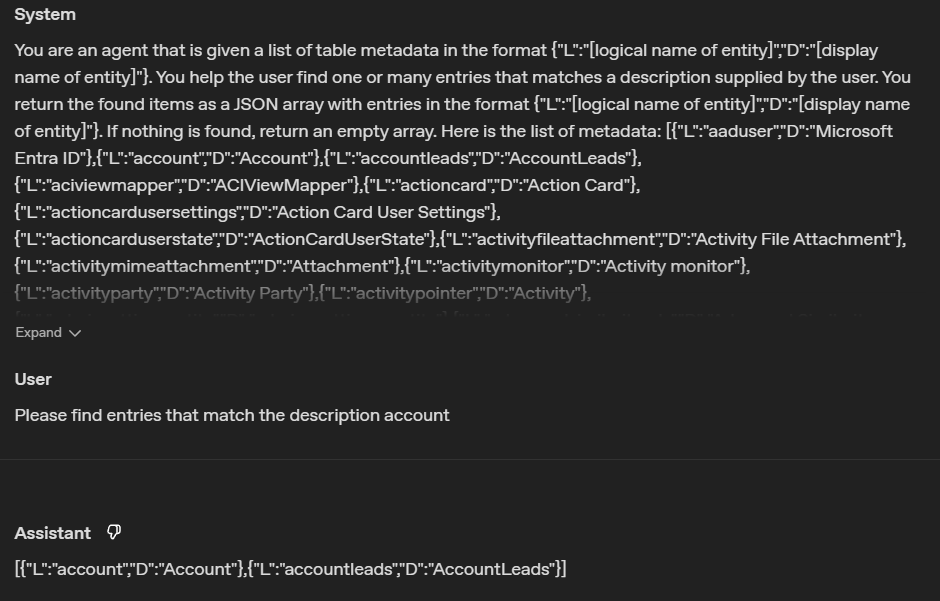

As mentioned above, the function accomplishes this by making an internal call to the LLM to match the user’s description of the table with one or more tables in the list of table metadata.

The AI correctly decides that the account table is the right one for this query.

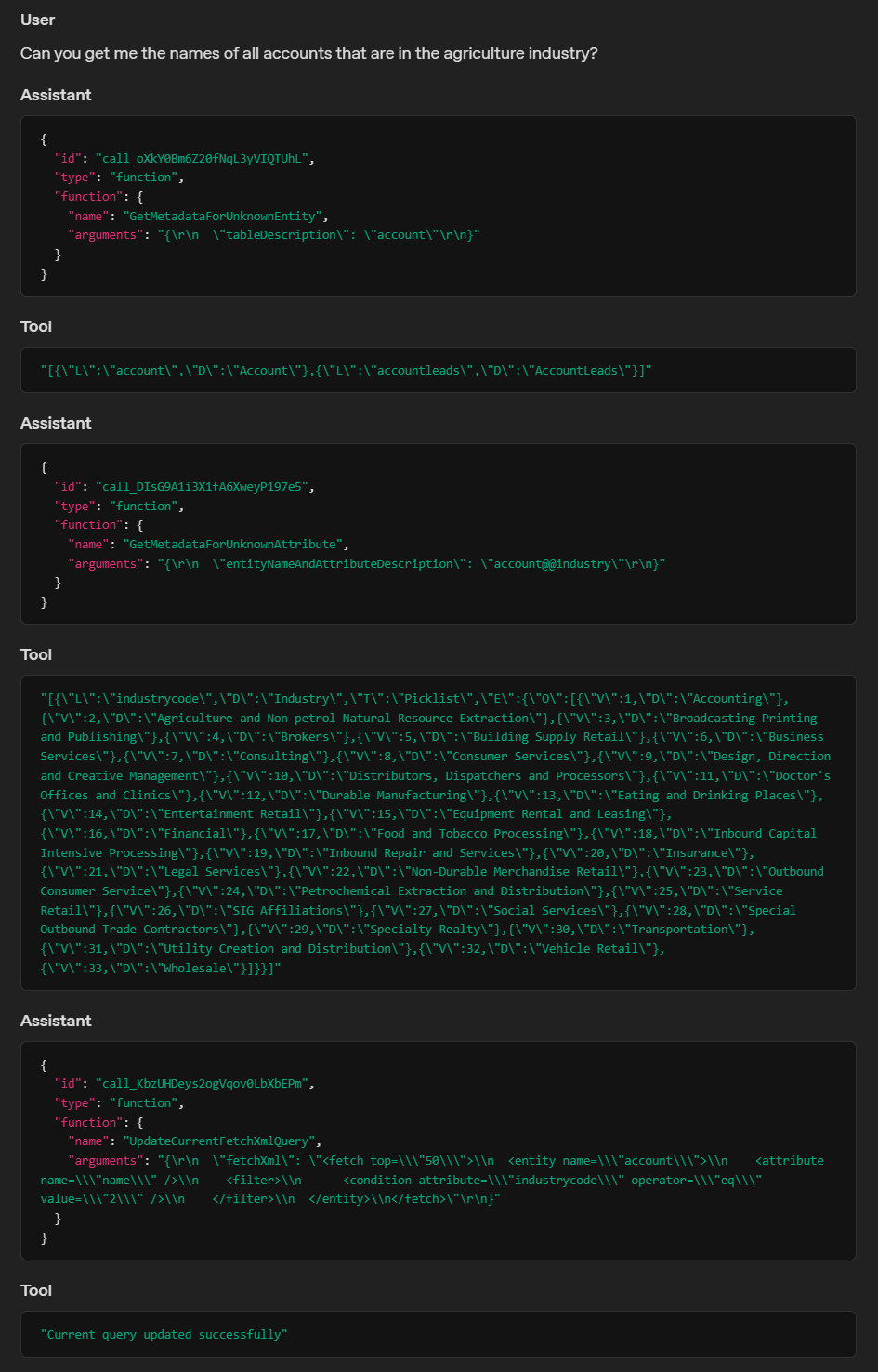

Then, the AI uses the function GetMetadataForUnknownAttribute to get the field matching the “industry” description, and the optionset value matching the description “agriculture”.

The AI correctly identifies that the field industrycode and the optionset value 2 - Agriculture and Non-petrol Natural Resource Extraction - match the description. Here, too, it makes an internal call to the LLM to find the correct attribute.
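For reference, the resulting query could look roughly like the one built below with System.Xml.Linq. This is a sketch, not the query FXB actually generated: the filter follows from the lookup described above (industrycode equal to 2), while the selected column (name) is an assumption.

```csharp
using System;
using System.Xml.Linq;

// Build a FetchXml query for accounts in a given industry.
// The selected column ("name") is an assumption; the filter uses the
// industrycode value resolved from metadata (2 = Agriculture and
// Non-petrol Natural Resource Extraction).
XElement BuildAccountsByIndustryQuery(int industryCode) =>
    new XElement("fetch",
        new XElement("entity", new XAttribute("name", "account"),
            new XElement("attribute", new XAttribute("name", "name")),
            new XElement("filter",
                new XElement("condition",
                    new XAttribute("attribute", "industrycode"),
                    new XAttribute("operator", "eq"),
                    new XAttribute("value", industryCode)))));

Console.WriteLine(BuildAccountsByIndustryQuery(2));
```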

If you want to check out the code for yourself, see these two repos in Jonas Rapp’s GitHub. And as mentioned above, check out his blog post on how to use AI in your own XTB plugins.

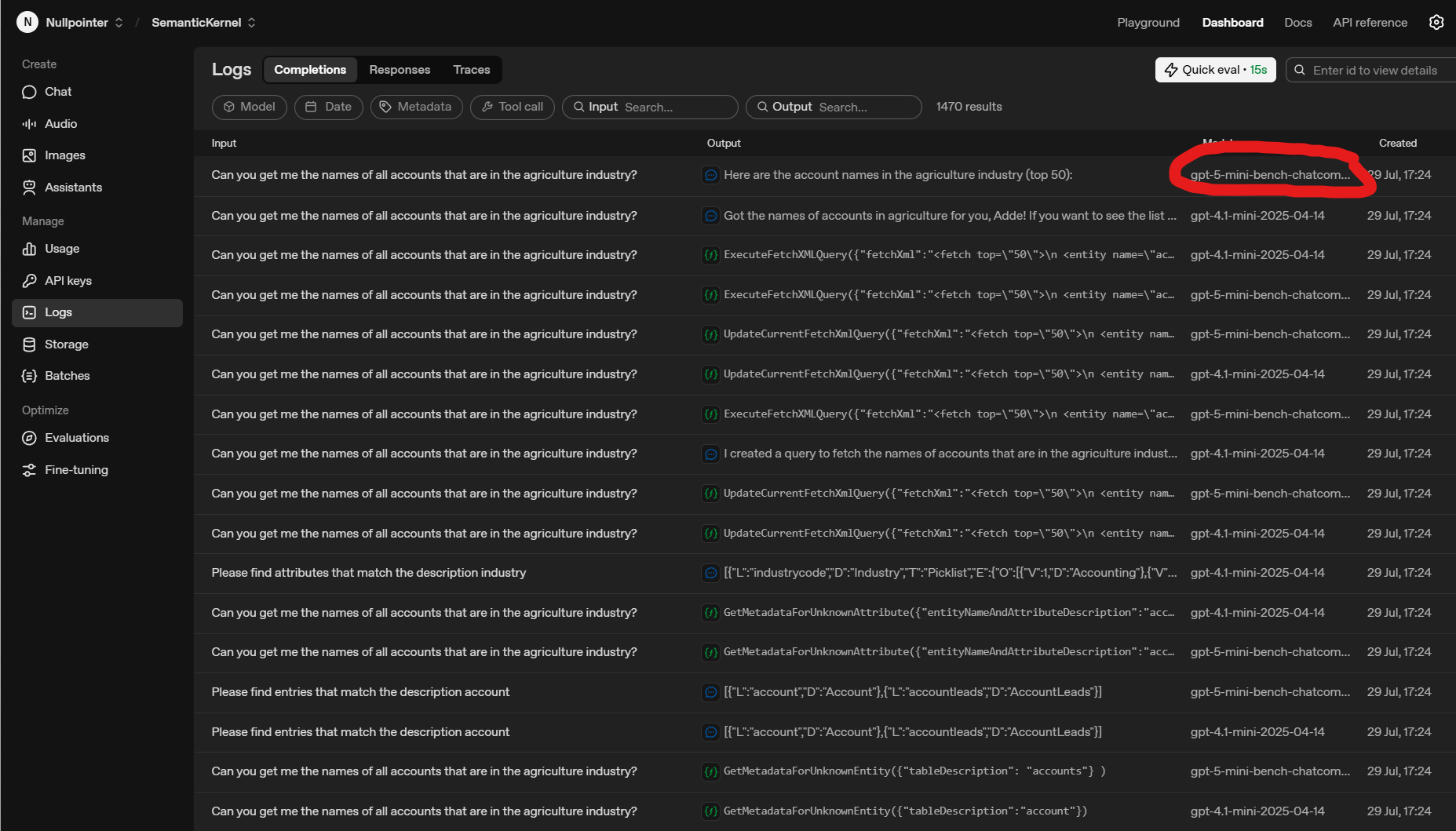

But there is one more thing… What is this? Is OpenAI using our FXB requests to train their upcoming ChatGPT 5 model?? What is gpt-5-mini-bench-chatcompletions-gpt41m-api-ev3?

The plot thickens… Exciting times ahead for sure!